ABSTRACT

Team SCOPE has created an assistive robot for healthcare delivery. The robot is mobile, responds to spoken commands, and is driven by artificial intelligence (AI). It extracts information about the patient’s health from conversations and visual interactions and summarizes these observations into reports that could be merged with the patient’s Electronic Health Records (EHRs).

This process aids healthcare professionals in delivering better care by augmenting attendance, increasing the accuracy of patient information collection, aiding in diagnosis, streamlining data collection, and automating the ingestion and incorporation of this information into EHR systems. SCOPE’s solution combines cloud-based AI services with local processing. Using VEX Robotics parts and an Arduino microcontroller, SCOPE built a mobile platform for the robot that implements basic motions and obstacle avoidance. These separate systems are integrated using a Java master program, Node-RED, and IBM Watson cloud services. The resulting AI system can be expanded for other applications within healthcare delivery.

VISUAL OBJECT RECOGNITION

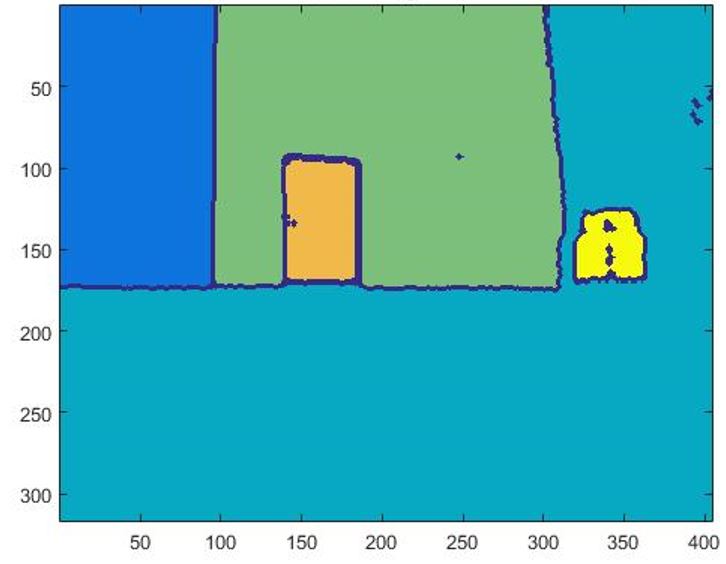

Figure 8 Sobel Regions differentiated

We begin by acquiring the initial depth data, which is stored as a matrix in which each entry is the distance of that pixel from the capture plane. Our algorithm had difficulty detecting the edge between an object and the floor, so we set the depth values of floor pixels to zero. We identified the floor plane using the Kinect’s floor clip plane and compared each point in the depth data to that plane. If a point’s distance from the plane was below a threshold, we set it to zero.
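
The floor-removal step can be sketched as follows. This is a minimal Python illustration, not the team's MATLAB code. It makes several assumptions:

- The floor plane is given as coefficients (a, b, c, d) of a*x + b*y + c*z + d = 0 with a unit-length normal. This is assumed to match the form of the Kinect SDK's floor clip plane.
- Depth is in metres.
- A simple pinhole back-projection is adequate, using hypothetical intrinsics (FX, FY, CX, CY) with y pointing up. In practice the SDK's own coordinate mapping would replace this step.
- The 3 cm threshold is a placeholder.

```python
from typing import List, Tuple

# Hypothetical pinhole intrinsics (placeholders, not calibrated Kinect values).
FX, FY = 365.0, 365.0
CX, CY = 256.0, 212.0

def remove_floor(
    depth: List[List[float]],
    plane: Tuple[float, float, float, float],
    threshold: float = 0.03,
    fx: float = FX, fy: float = FY, cx: float = CX, cy: float = CY,
) -> List[List[float]]:
    """Return a copy of `depth` (metres) with floor pixels set to 0.

    `plane` = (a, b, c, d) describes the floor as a*x + b*y + c*z + d = 0
    with a unit normal, so a*x + b*y + c*z + d is a point's signed
    distance from the floor. Sign conventions here are assumptions.
    """
    a, b, c, d = plane
    out = [row[:] for row in depth]
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # no depth reading at this pixel
                continue
            # Back-project pixel (u, v) to camera space (y up, assumed).
            x = (u - cx) * z / fx
            y = (cy - v) * z / fy
            if abs(a * x + b * y + c * z + d) < threshold:
                out[v][u] = 0.0  # close enough to the floor: zero it
    return out
```

Zeroing the floor, rather than dropping it, keeps the matrix shape intact for the edge detection that follows.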

Figure 9 Filtered Sobel Regions
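
Figures 8 and 9 refer to Sobel regions. The sketch below shows one plausible way to form such regions. It is an assumption, not taken from the team's code:

1. Compute a Sobel gradient magnitude on the floor-zeroed depth map.
2. Label 4-connected groups of non-zero pixels whose gradient is below a threshold.

Strong depth edges then separate objects from one another. The function names and the `edge_thresh` parameter are hypothetical.

```python
from collections import deque
from typing import List, Tuple

Grid = List[List[float]]

def sobel_magnitude(img: Grid) -> Grid:
    """Gradient magnitude sqrt(Gx^2 + Gy^2) from 3x3 Sobel kernels.
    Border pixels are left at 0."""
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0.0
            for i in range(3):
                for j in range(3):
                    p = img[r + i - 1][c + j - 1]
                    gx += kx[i][j] * p
                    gy += ky[i][j] * p
            mag[r][c] = (gx * gx + gy * gy) ** 0.5
    return mag

def label_regions(depth: Grid, mag: Grid, edge_thresh: float) -> Tuple[List[List[int]], int]:
    """Label 4-connected groups of pixels that have a depth reading and a
    gradient below `edge_thresh`. Label 0 marks edges and zeroed pixels."""
    h, w = len(depth), len(depth[0])
    labels = [[0] * w for _ in range(h)]

    def inside(y: int, x: int) -> bool:
        return depth[y][x] != 0 and mag[y][x] < edge_thresh

    count = 0
    for r in range(h):
        for c in range(w):
            if labels[r][c] or not inside(r, c):
                continue
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of one region
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and not labels[ny][nx] and inside(ny, nx):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count
```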

The next step is to determine the bounding boxes of the regions. We filter these results again to remove regions that encompass most of the image, as they represent the background and the areas removed in the previous step rather than the objects we are looking for. We also combine bounding boxes that cover the same region into one. We can then map these boxes to the color image and crop out sub-images. Because we are more interested in isolating the objects than in finding their precise boundaries, we send a slightly larger crop that still contains the full object. In our current implementation, the segmentation process takes four seconds.
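
The box filtering and grouping steps might look like the sketch below. It makes these assumptions:

- The input is a label image, such as one produced by connected-component labeling.
- "Encompass most of the image" is treated as a hypothetical area fraction, `max_frac`.
- Grouping is interpreted as merging bounding boxes that overlap.
- Each kept box is padded by a hypothetical `margin`, clipped to the image.

Mapping the boxes onto the color image depends on the Kinect's depth-to-color registration and is not shown.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right), inclusive

def region_boxes(labels: List[List[int]]) -> Dict[int, Box]:
    """Tightest bounding box for each non-zero label."""
    boxes: Dict[int, Box] = {}
    for r, row in enumerate(labels):
        for c, lab in enumerate(row):
            if lab == 0:
                continue
            t, l, b, rt = boxes.get(lab, (r, c, r, c))
            boxes[lab] = (min(t, r), min(l, c), max(b, r), max(rt, c))
    return boxes

def drop_large(boxes: List[Box], h: int, w: int, max_frac: float = 0.5) -> List[Box]:
    """Discard boxes covering more than max_frac of the image (background)."""
    return [bx for bx in boxes
            if (bx[2] - bx[0] + 1) * (bx[3] - bx[1] + 1) <= max_frac * h * w]

def overlaps(p: Box, q: Box) -> bool:
    return p[0] <= q[2] and q[0] <= p[2] and p[1] <= q[3] and q[1] <= p[3]

def merge_overlapping(boxes: List[Box]) -> List[Box]:
    """Repeatedly replace any two overlapping boxes by their union."""
    merged = list(boxes)
    changed = True
    while changed:
        changed = False
        for i in range(len(merged)):
            for j in range(i + 1, len(merged)):
                if overlaps(merged[i], merged[j]):
                    a, b = merged[i], merged[j]
                    merged[i] = (min(a[0], b[0]), min(a[1], b[1]),
                                 max(a[2], b[2]), max(a[3], b[3]))
                    del merged[j]
                    changed = True
                    break
            if changed:
                break
    return merged

def pad_box(box: Box, h: int, w: int, margin: int) -> Box:
    """Grow a box by `margin` pixels on each side, clipped to the image."""
    t, l, b, r = box
    return (max(0, t - margin), max(0, l - margin),
            min(h - 1, b + margin), min(w - 1, r + margin))
```

The padding is what lets the crop sent for recognition contain the whole object even when a region boundary is slightly off.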

NATURAL LANGUAGE INTERACTION

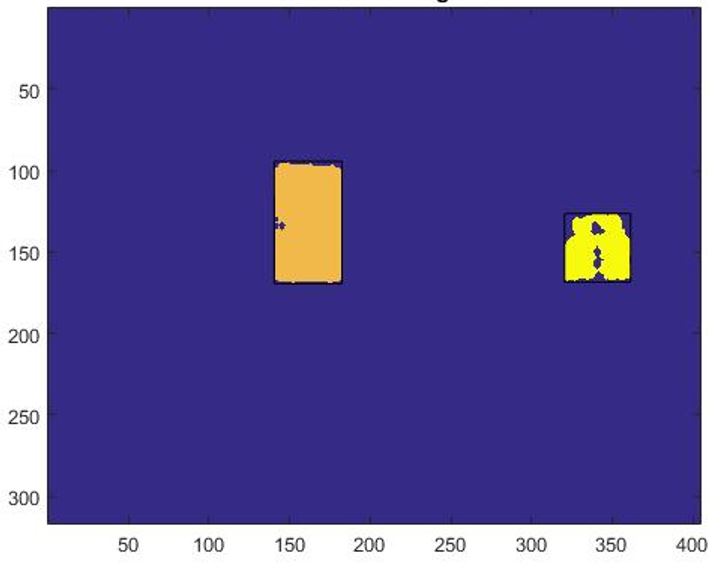

Figure 11 Sample interface for dialog in the IBM Watson Conversation Service

Figure 11 shows an example of a dialog flow created in Watson. Each rectangular box is a node, which is triggered when a specific entity is detected. The console interface is designed for ease of use, which presented some challenges for this project.
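
The node-and-entity model can be illustrated with a small, self-contained sketch. This is not the Watson Conversation API. The entity lexicon, the `DialogNode` type, and the `fire` function are hypothetical names chosen for illustration. The sketch shows that an answer containing none of a node's trigger entities fires no node at all.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical entity lexicon: entity name -> single-word synonyms.
ENTITIES: Dict[str, List[str]] = {
    "yes": ["yes", "yeah", "yep"],
    "no": ["no", "nope", "never"],
}

@dataclass
class DialogNode:
    trigger: str  # entity whose detection fires this node
    record: str   # what gets written to the patient summary

def detect_entities(utterance: str) -> List[str]:
    """Return every entity with at least one synonym in the utterance."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in utterance.lower())
    words = set(cleaned.split())
    return [name for name, syns in ENTITIES.items() if words & set(syns)]

def fire(nodes: List[DialogNode], utterance: str) -> Optional[DialogNode]:
    """Return the first node whose trigger entity was detected, or None."""
    found = detect_entities(utterance)
    for node in nodes:
        if node.trigger in found:
            return node
    return None

# Hypothetical nodes for "Have you ever had this type of pain before?"
PAIN_BEFORE = [
    DialogNode("yes", "recurring pain"),
    DialogNode("no", "first episode"),
]
```

With these nodes, "Yes, last year" fires the `yes` node, while "First time" matches neither entity and returns `None`. If the surrounding program assumes that some node always fires, a result like this is one plausible way a run could fail to complete. It is an illustration, not a diagnosis of SCOPE's program.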

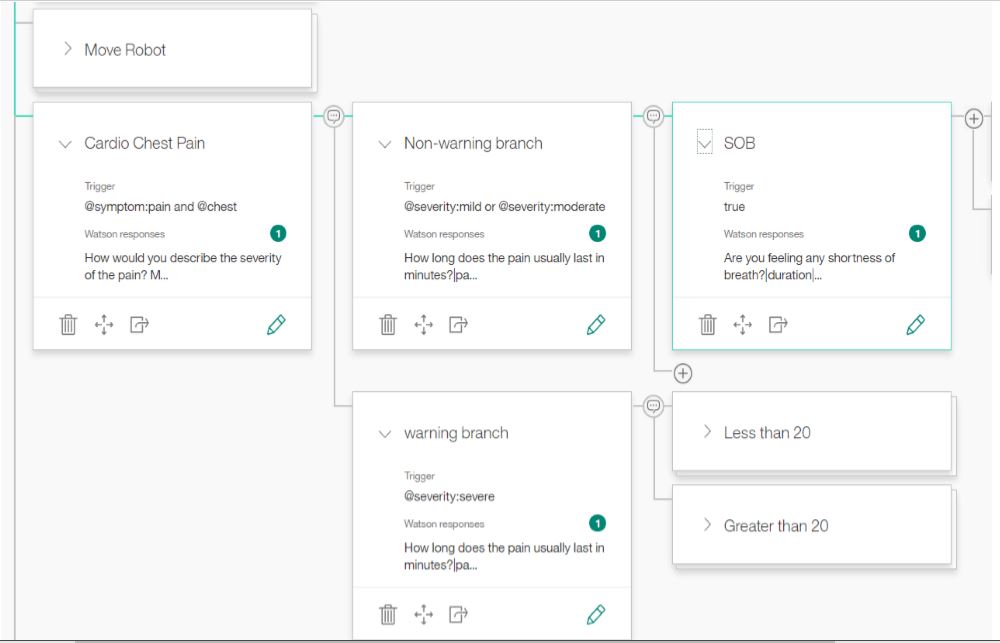

Figure 13 NLP program runs that completed successfully versus runs in which an error prevented processing of all dialog responses. The completion rate was measured over 50 survey responses, of which 84% (42) resulted in successful program completion.

Of all the survey responses run through the NLI program, 84% were processed through the full hypothetical conversation without error and produced appropriate summary charts based on the conversation (Figure 13). The remaining 16% (8 response sets) caused an error that prevented the natural language program from running to completion.

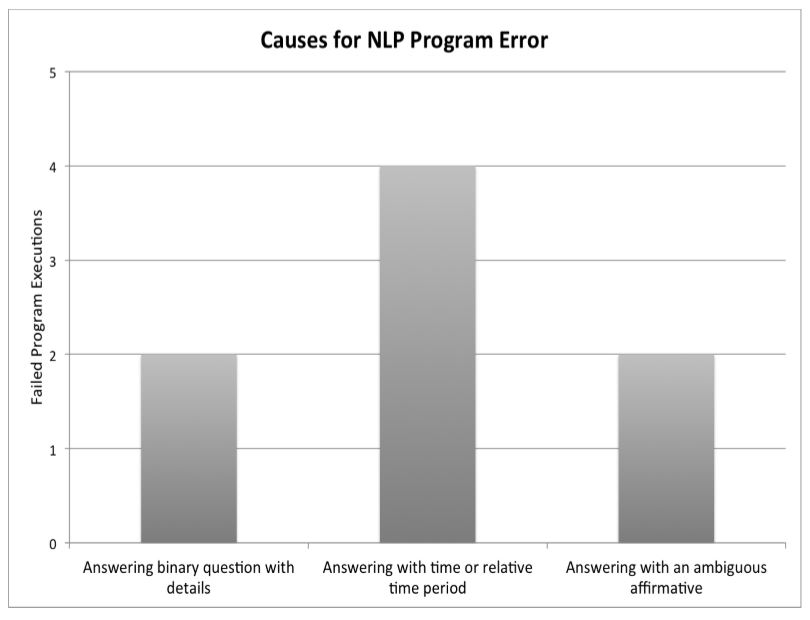

Figure 14 Causes of NLP error in program execution. The responses behind the 8 runs that ended in an error were grouped into three general categories based on a qualitative analysis.

Among the survey responses that caused an error during program execution, the most common cause was answering a binary (yes/no) question with a time or relative time period (Figure 14). For example, answering the question “Have you ever had this type of pain before?” with “First time” falls into this category. Errors caused by answering with details and by non-binary affirmative answers occurred with equal frequency.

MOBILITY

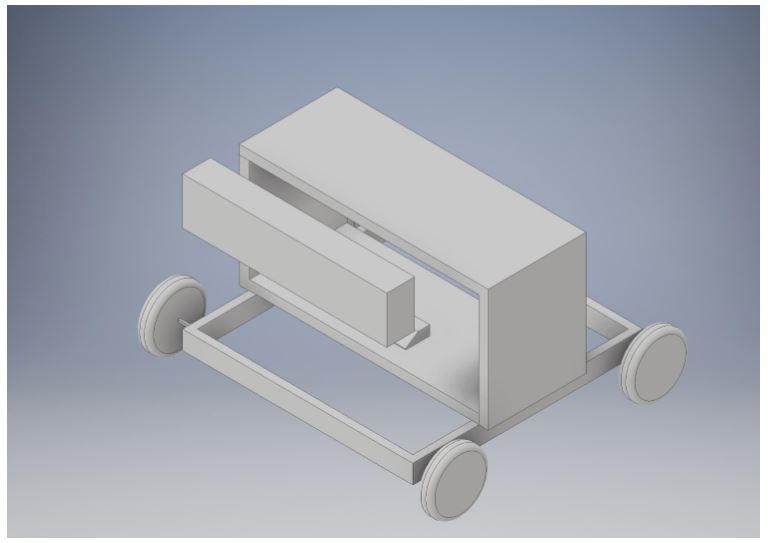

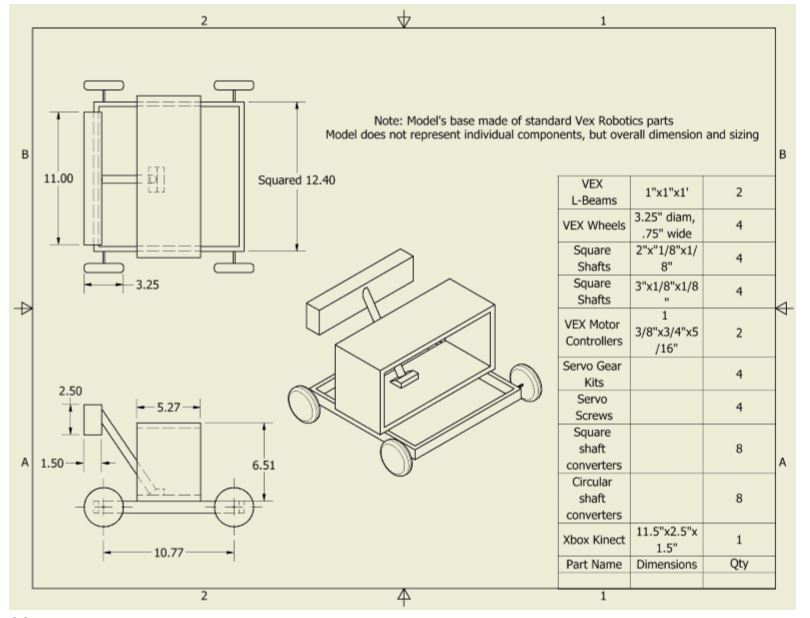

NELI is built on a 12” x 12” VEX Robotics chassis with two wooden platforms. The first is mounted on the chassis, and the second is constructed 10” above the body for additional hardware mounting, as shown in Figure 17 and Figure 18 below. The Arduino is set up on the lower platform along with the breadboards. The distance sensor is mounted on the front of the chassis for collision prevention. The motor controllers are mounted on the underside of the lower platform and are wired to the Arduino. A tablet stand is mounted on the lower platform and extends forward to hold the Kinect in a forward-facing orientation. The battery and the remainder of the electronics are placed on the lower platform.

Figure 17 A basic CAD drawing of the current NELI design, featuring the Kinect mounted on a tablet stand in front of the two wooden platforms

Figure 18 An engineering drawing showcasing views of NELI’s structure from the top, side, and isometric views. This includes a bill of materials shown in the bottom right

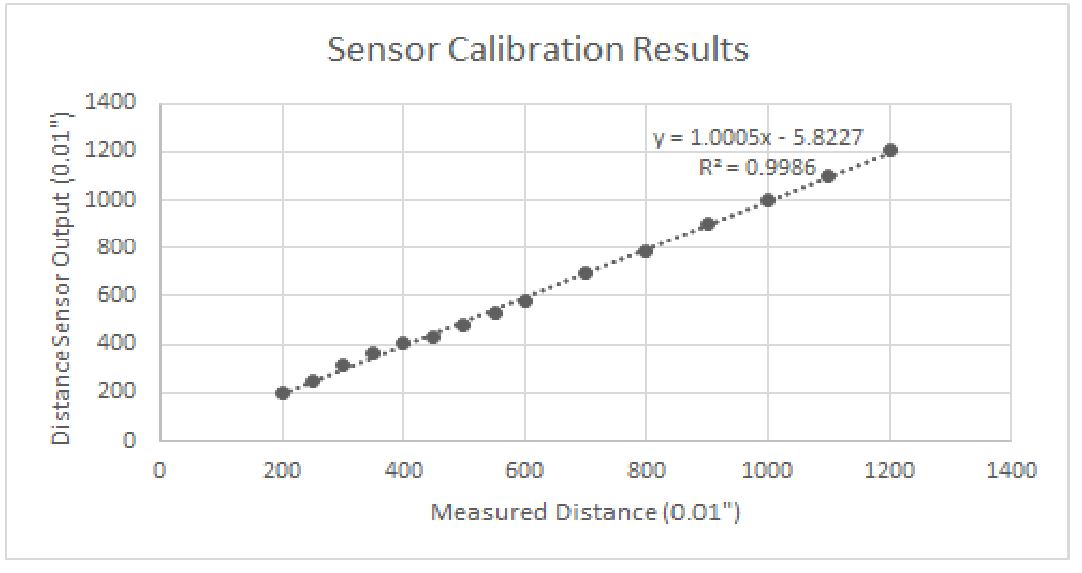

Sensor calibration was limited to these short distances because the Arduino code only requires action when the distance sensor detects something less than 8 inches away. After sensor values were acquired at each distance, they were plotted against the actual distances and fitted with a line of best fit (Figure 20). In each trial the R² value was greater than 0.99, indicating a strong linear relationship, and the readings were well within one tenth of an inch of the real distance.
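
The line of best fit and its R² can be reproduced with ordinary least squares. The sketch below fits actual distance as a function of the raw sensor reading, so the fit doubles as a correction. It then applies the 8-inch action threshold to the corrected value. The orientation of the fit and the function names are illustrative assumptions, not the team's calibration procedure or Arduino code.

```python
from typing import List, Tuple

AVOID_DISTANCE_IN = 8.0  # act only when an obstacle is closer than 8 inches

def fit_line(x: List[float], y: List[float]) -> Tuple[float, float, float]:
    """Ordinary least-squares fit y = m*x + b; returns (m, b, r_squared)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 points")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    m = sxy / sxx
    b = my - m * mx
    ss_res = sum((yi - (m * xi + b)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    r2 = 1.0 - ss_res / ss_tot if ss_tot else 1.0
    return m, b, r2

def needs_avoidance(raw_in: float, m: float, b: float) -> bool:
    """Correct a raw reading with the fitted line, then apply the 8-inch rule."""
    return m * raw_in + b < AVOID_DISTANCE_IN
```

A high R² only shows that the relationship is linear. A slope near one and an intercept near zero are what show that the readings track the true distance one-to-one.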

Figure 20 Results of a single trial of distance sensor calibration. Distances were converted to hundredths of an inch for precision. The trend line has a slope very close to one and an R² value very close to one, indicating a strong one-to-one relationship.

INTEGRATION

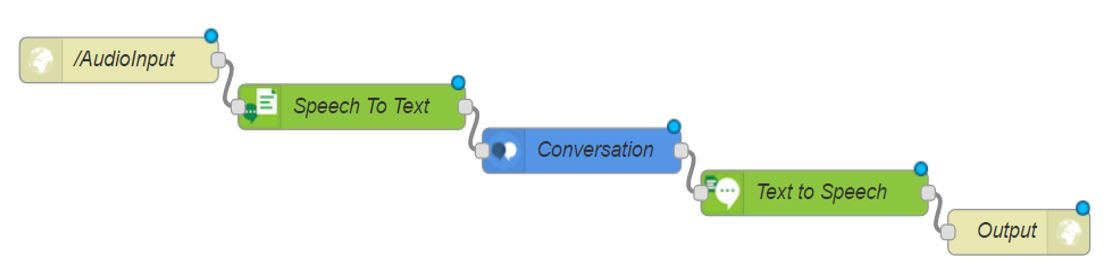

Figure 23 Node-RED flow used to communicate with the NLI and image recognition cloud services. The flow runs from left to right: input is received on the left and passed through various nodes until the rightmost nodes send an output back. This is an example flow for NELI’s conversation function.
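
A client of a flow like this sends a request into the leftmost node and waits for the rightmost node's reply. The sketch below makes these hypothetical assumptions:

- The conversation flow begins with a Node-RED "http in" node accepting POST requests at `/conversation`.
- The flow ends with an "http response" node.
- Node-RED runs on its default port, 1880.
- The payload is a JSON object with a `text` field.

The team's master program is written in Java; this Python version only illustrates the request shape.

```python
import json
import urllib.request

# Hypothetical endpoint: an "http in" node on POST /conversation, served on
# Node-RED's default port 1880.
NODE_RED_URL = "http://localhost:1880/conversation"

def build_request(text: str, url: str = NODE_RED_URL) -> urllib.request.Request:
    """Wrap one patient utterance as a JSON POST (payload shape is assumed)."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def send(text: str, timeout: float = 10.0) -> dict:
    """Post the utterance and parse the JSON returned by the "http response" node."""
    with urllib.request.urlopen(build_request(text), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the message format easy to check without a running Node-RED instance.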

CONCLUSION

Team SCOPE has demonstrated a simple implementation of an intelligent personal assistant built on cloud computing services, with its functions compartmentalized so that each can be modified independently. Furthermore, these functions have been tailored for an application in healthcare. The chest pain conversation and object recognition tests served as a proof of concept for two abilities. The first is NELI’s ability to converse intelligently across unique conversations. The second is its ability to improve upon IBM Watson’s object recognition accuracy through on-board processing.

Intelligent assistants could have a significant positive impact by performing some of the routine work typically done by nurses, physicians, and physician assistants. NELI’s capabilities have been tested individually, demonstrating their accuracy and the viability of more comprehensive versions. A natural language-based interface has been designed and realized using IBM’s Watson Conversation technology. The NLI was tested with sample responses to measure how accurately patient information is recorded for the physician. The results showed that simple responses were recorded much more accurately than complex responses.

The visual object recognition capabilities have been implemented using a Microsoft Kinect, Watson cloud computing services, and MATLAB image segmentation code running locally. The functionality was tested with an assortment of images to measure the robustness of the algorithms. NELI’s mobility platform runs local code on an Arduino microcontroller, which communicates with the rest of the system to drive the robot’s actuators. This provides the basic movement and distance sensing required for the robot to operate autonomously and effectively in a hospital or clinical environment. The accuracy of the movements performed by this subsystem has been analyzed and shown to be sufficiently precise for the applications we have proposed.

Source: University of California

Authors: John Bachkosky Vi | Alexandra Boukhvalova | Kevin Chou | William Gunnarsson | James Ledwell | Brendan Mctaggart | Xiaoqing Qian | Nicholas Rodgers | John Shi | Jason Yon
