ABSTRACT

The Hough Transform (HT) has been widely used for straight line detection in low-definition and still images, but it suffers from long execution time and high resource requirements. Field Programmable Gate Arrays (FPGAs) provide a competitive alternative for hardware acceleration with high computing performance. In this paper, we propose a novel parallel Hough Transform (PHT) and an associated FPGA architecture framework for real-time straight line detection in high-definition videos.

A resource-optimized Canny edge detection method with enhanced non-maximum suppression conditions is presented to suppress most false edges and obtain more accurate candidate edge pixels for the subsequent accelerated computation. Then, a novel PHT algorithm exploiting spatial angle-level parallelism is proposed to improve computational accuracy by refining the minimum computational step.

Moreover, the FPGA-based multi-level pipelined PHT architecture, optimized by spatial parallelism, ensures real-time computation for 1,024 × 768 resolution videos without any off-chip memory consumption. The framework is evaluated on the ALTERA DE2-115 FPGA evaluation platform at a maximum frequency of 200 MHz, and it calculates straight line parameters in 15.59 ms on average for one frame. Qualitative and quantitative evaluation results validate the system performance in terms of data throughput, memory bandwidth, resource usage, speed, and robustness.

OVERVIEW OF THE PROPOSED EMBEDDED STRAIGHT LINE DETECTION VISION SYSTEM

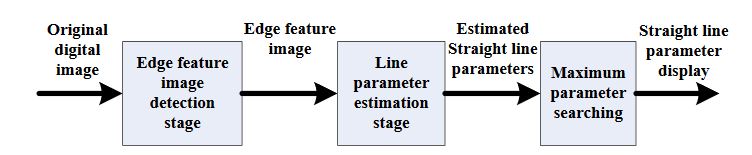

Figure 1. PHT straight line detection algorithm flow

We present a novel PHT-based straight line detection algorithm and its corresponding FPGA architecture implementation to address the speed and accuracy problems of the classical HT. As Figure 1 shows, the algorithm has two main stages: edge feature image detection and straight line parameter estimation. To obtain the edge features from the original digital image for accelerated computation, a resource-optimized Canny edge detection algorithm is adopted.

RESOURCE-OPTIMIZED CANNY EDGE DETECTION METHOD

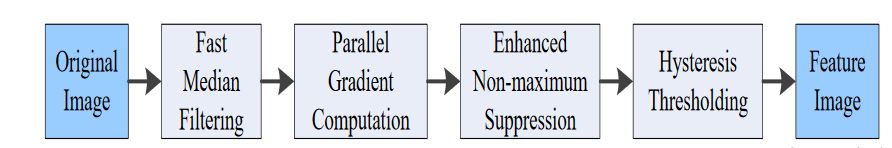

Figure 3. Flow chart of the resource-optimized Canny edge detection algorithm

Furthermore, enhanced non-maximum suppression is employed to suppress false edges by imposing more stringent suppression conditions. The modified Canny edge detection flow chart is shown in Figure 3. Accordingly, the parallel computing mechanisms for fast median filtering, parallel gradient computation, and enhanced non-maximum suppression are explained in detail in the following paragraphs, with a view to resource optimization and accuracy improvement.
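For orientation, the baseline step that the enhanced conditions tighten can be sketched as textbook non-maximum suppression. This is only an illustrative sketch: the function name, the list-of-lists image layout, and the four-direction quantization are assumptions, and the more stringent conditions of the proposed method are not reproduced here.

```python
import math


def non_maximum_suppression(mag, gx, gy):
    """Textbook NMS: keep a pixel only if its gradient magnitude is a
    local maximum along the (quantized) gradient direction.

    mag, gx, gy are equally sized lists of rows; border pixels are left at 0.
    """
    h, w = len(mag), len(mag[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            # Fold the gradient direction into [0, 180) degrees.
            angle = math.degrees(math.atan2(gy[r][c], gx[r][c])) % 180.0
            if angle < 22.5 or angle >= 157.5:
                dr, dc = 0, 1      # gradient roughly horizontal
            elif angle < 67.5:
                dr, dc = 1, 1      # gradient along the down-right diagonal
            elif angle < 112.5:
                dr, dc = 1, 0      # gradient roughly vertical
            else:
                dr, dc = 1, -1     # gradient along the down-left diagonal
            m = mag[r][c]
            # Compare with the two neighbours on either side along the gradient.
            if m > 0 and m >= mag[r + dr][c + dc] and m >= mag[r - dr][c - dc]:
                out[r][c] = m
    return out
```

Applied to a blurred vertical edge, only the ridge of the magnitude profile survives, which is the thinning behaviour that any stricter condition would build on.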

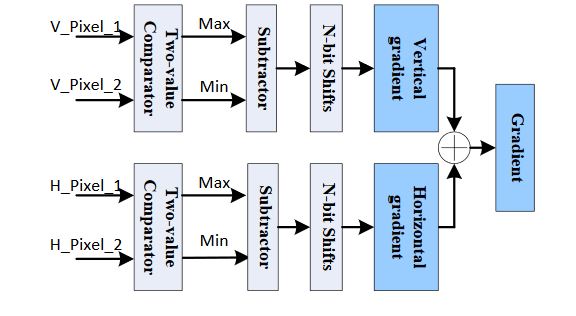

Figure 7. Parallel gradient computation architecture

To reduce the computational complexity in the parameter coordinates, the sign of the gradient must be recorded as well as its absolute value. As Figure 7 shows, two two-value comparators are used to determine the actual differences between pixel values. Based on each difference, the absolute value of the gradient is calculated with the corresponding subtractor.
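The comparator-then-subtractor idea can be illustrated in software as follows. This is a minimal sketch rather than the hardware design itself: the function names, the sign-flag encoding (0 for non-negative, 1 for negative), and the choice of left/right and up/down neighbours are all assumptions made for illustration.

```python
from typing import Tuple


def signed_difference(a: int, b: int) -> Tuple[int, int]:
    """Return (sign_flag, magnitude) of a - b for unsigned pixel values.

    The comparison plays the role of the comparator: it decides which
    operand is larger, so the subtraction is always larger - smaller
    and never produces a negative intermediate value.
    """
    if a >= b:
        return 0, a - b   # sign flag 0: difference is non-negative
    return 1, b - a       # sign flag 1: difference is negative


def gradient(left: int, right: int, up: int, down: int):
    """Horizontal and vertical differences as (sign_flag, magnitude) pairs.

    The neighbour choice here is hypothetical; one comparator/subtractor
    pair handles each direction.
    """
    gx = signed_difference(right, left)
    gy = signed_difference(down, up)
    return gx, gy
```

For example, `gradient(5, 9, 200, 40)` yields a non-negative horizontal difference of 4 and a negative vertical difference of magnitude 160, with both magnitudes staying within the unsigned pixel range.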

MULTI-LEVEL PIPELINED PHT BASED STRAIGHT LINE PARAMETER ESTIMATION AND DECISION

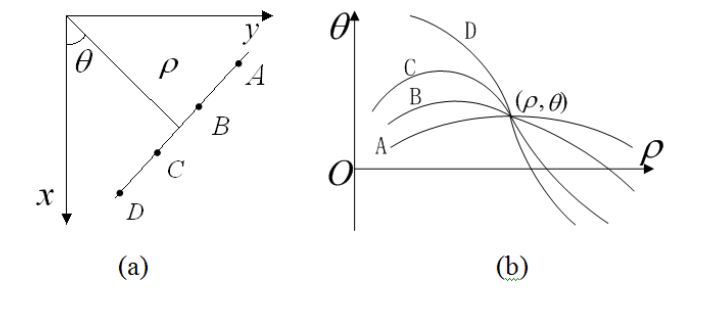

Figure 9. HT representation: (a) Cartesian coordinates; (b) parameter coordinates

According to the coordinate correspondence, each point on a straight line in Cartesian coordinates corresponds to a curve in the parameter space. Consequently, all the points on the same straight line correspond to a cluster of curves that intersect at the same point (ρ, θ) in the parameter space, as Figure 9 shows.
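The correspondence above can be illustrated with a small software sketch of classical HT voting. It assumes the usual normal-form parameterization ρ = x cos θ + y sin θ with θ sampled over [0°, 180°); the function name, the 1° default step, and the rounding of ρ to integer bins are illustrative assumptions, not the PHT architecture proposed here.

```python
import math
from collections import Counter


def hough_votes(points, theta_step_deg=1.0):
    """Accumulate votes in (rho, theta) space for (x, y) edge pixels.

    Each point traces one curve rho(theta) = x*cos(theta) + y*sin(theta);
    every sampled angle index k contributes one vote to the bin
    (round(rho), k).
    """
    votes = Counter()
    n_angles = int(round(180.0 / theta_step_deg))
    for x, y in points:
        for k in range(n_angles):
            theta = math.radians(k * theta_step_deg)
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(round(rho), k)] += 1
    return votes
```

Collinear points all vote for the same bin: for the pixels of the vertical line x = 5, the bin at ρ = 5 and θ = 0° collects one vote per pixel, which is the intersection point of the curve cluster. The angle step sets both the angular resolution and the number of inner-loop iterations per pixel, so a finer step costs proportionally more work in a sequential implementation.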

EVALUATION

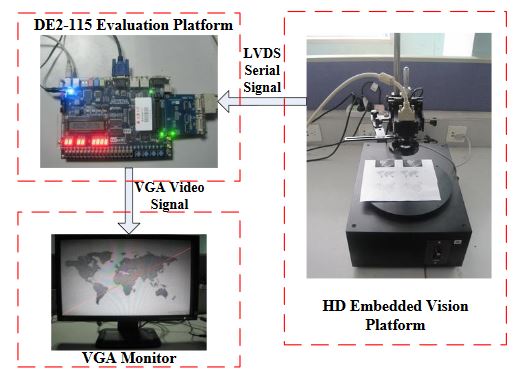

Figure 13. FPGA based embedded straight line detection vision system

The proposed architecture has been evaluated on the ALTERA DE2-115 platform with a Cyclone IV EP4CE115F29 FPGA and the Quartus II version 10.0 synthesis tool, at a maximum operating frequency of 200 MHz, as Figure 13 shows.

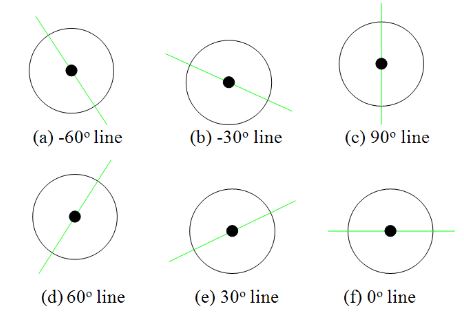

Figure 17. Accuracy and robustness testing samples

The above qualitative experimental results show that the proposed PHT algorithm can correctly detect a single straight line in a complex background. In this subsection, we present quantitative experimental results to show the accuracy and robustness of the algorithm and hardware architecture. In Figure 17, six hand-generated testing straight lines are given with angles of –30°, –60°, 0°, 30°, 60°, and 90°.

CONCLUSIONS

In this paper, we have presented a novel PHT algorithm and its FPGA implementation architecture for real-time straight line detection in high-definition video sequences. To obtain fewer but more accurate candidate edge pixels, we enhance the non-maximum suppression conditions within a resource-optimized Canny edge detection algorithm. For real-time straight line detection on high-definition video sequences, a novel spatial angle-level PHT algorithm and the corresponding multi-level pipelined PHT hardware architecture are proposed.

This design gives the framework an advantage over existing methods that rely on increasing processor frequency. The proposed algorithm and architecture have been evaluated on the ALTERA DE2-115 evaluation platform with a Cyclone IV EP4CE115F29 FPGA. Quantitative results on 1,024 × 768 resolution videos, including throughput, maximum error, memory access bandwidth, and computational time, are presented and compared with four representative algorithms on different hardware platforms.

Owing to the joint optimization of the PHT software algorithm and its implemented architecture, the system is not limited to estimating straight line parameters quickly and accurately in high-definition video sequences. This robust and effective embedded vision system also has potential applications in various pattern recognition tasks based on high-definition images.

Source: Shanghai Jiao Tong University

Authors: Xiaofeng Lu | Li Song | Sumin Shen | Kang He | Songyu Yu | Nam Ling